Top 7 Strategies for Data Scientists to Stay Updated on AI Developments

- Follow researchers and influencers on social media

- Leverage online resources

- Participate in events and webinars

- Learn new skills and advanced tools

- Network with peers and experts

- Experiment and innovate

- Stay curious and open-minded

Website references to stay up-to-date

Empower Your Data Science Journey with Advanced AI Tools

FAQs (Frequently Asked Questions)

- How do I stay up to date with AI as a data scientist?

- What is AI and why should I keep up with it?

- Where can I find beginner-friendly updates on AI?

- How do you keep track of AI development?

- What is the future of data science and artificial intelligence?

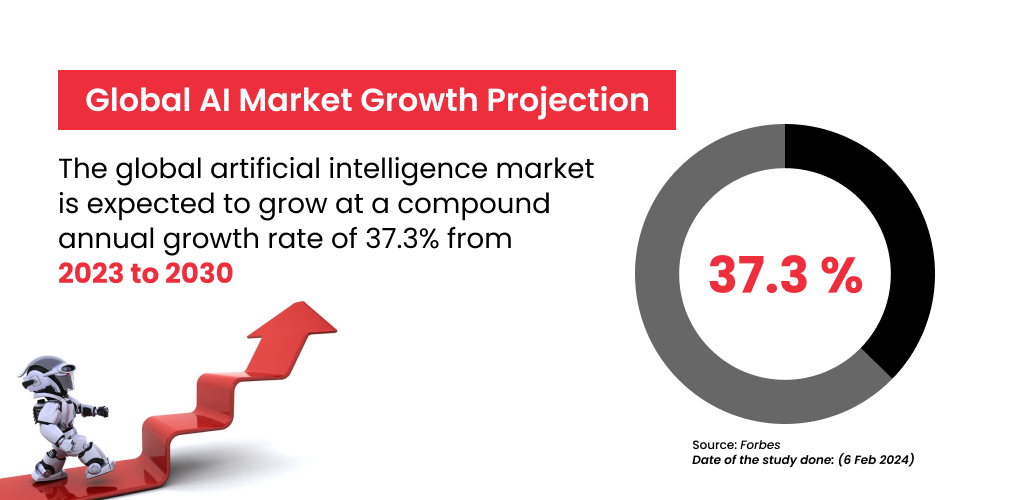

Did you know that, according to Forbes, the global artificial intelligence market is expected to grow at a compound annual growth rate (CAGR) of 37.3% from 2023 to 2030?

This growth suggests that businesses need to keep pace with the latest developments in AI to expand and grow. In a fast-paced, dynamic field like data science, where constant learning and adaptation are essential to staying competitive, it’s more important than ever to keep up with emerging technologies.

Are you looking for reliable tips and resources to help you stay ahead of the curve? This guide offers practical advice on how to succeed as a data scientist in today’s evolving landscape.

Top 7 Strategies for Data Scientists to Stay Updated on AI Developments

In this blog, you will discover practical strategies and helpful resources to support your journey as a data scientist:

1. Follow researchers and influencers on social media

One of the best and easiest ways to stay updated on artificial intelligence developments is to follow researchers and influencers on social media.

- Follow notable data science influencers like Andrew Ng, Sudalai Rajkumar, Kunal Jain, and Fei-Fei Li to gain a wealth of insights and resources and stay informed about new technologies and applications through their posts.

- Follow researchers who share their latest publications, findings, and insights on the most recent tech trends.

- Gain firsthand knowledge of future trends, along with expert opinions and discussions that can broaden your perspective.

Top 10 notable data science influencers to follow in 2024:

Source: https://airbyte.com/blog/top-data-influencers

| No. | Data Science Influencer |
|---|---|
| 1 | Andrew Ng |
| 2 | Kirk Borne |
| 3 | Ben Rogojan |
| 4 | Ronald van Loon |
| 5 | Andriy Burkov |
| 6 | Barr Moses |
| 7 | Deepak Goyal |
| 8 | Vincent Granville |
| 9 | Bernard Marr |
| 10 | Allie K. Miller |

2. Leverage online resources

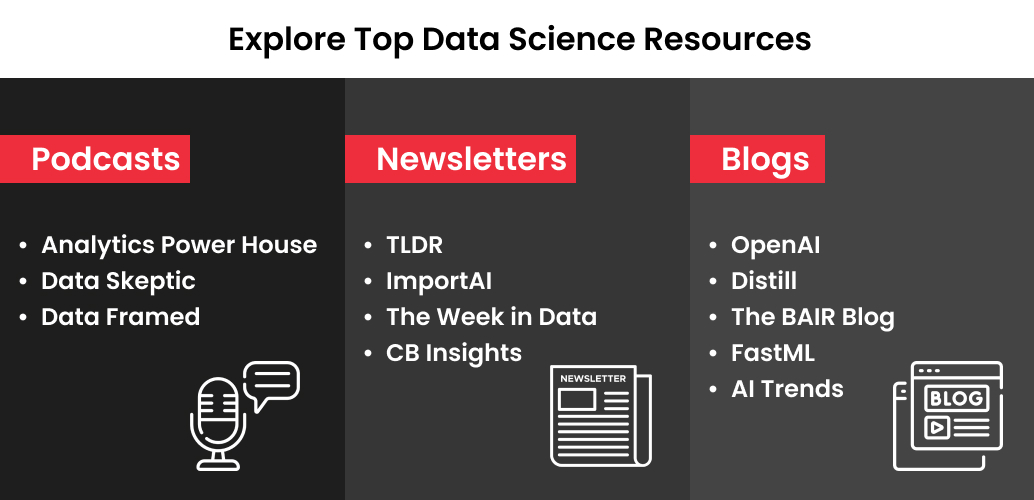

Another effective way to stay informed in the field of data science is to leverage online resources.

- Online resources such as blogs, podcasts, and newsletters offer a wealth of insights, tips, guides, and interviews from leading industry experts about what’s happening in the data science industry.

Examples include:

- Popular data science podcasts such as The Analytics Power Hour, Data Skeptic, and DataFramed

- Newsletters that share facts and insights on data science, such as TLDR, Import AI, The Week in Data, and CB Insights

- Blogs such as the OpenAI blog, Distill, The BAIR Blog, FastML, and AI Trends

3. Participate in events and webinars

Connecting with industry professionals is an effective way to gain insight into key trends in artificial intelligence, and actively participating in webinars and events is an ideal way to make those connections.

- Webinars and events offer great opportunities for both aspiring and established data scientists.

- Data scientists get the chance to learn from experts, network with peers, and discover the latest trends unfolding in the industry.

- Webinars often inspire learners by presenting real-world case studies.

Some examples of data science webinars, workshops, and events include:

- Data Science Salon: A comprehensive series of virtual and in-person data science events

- DataFest: A festival comprising data science workshops, hackathons and discussions with experts

4. Learn new skills and advanced tools

Equipping yourself with new skills and tools will help you navigate the challenges you face in the industry.

- Specializations offered by leading institutes can help you learn the right skills and tools.

- For a well-rounded skill set, focus on courses that cover data analysis, data engineering, data science ethics, and artificial intelligence and machine learning.

Examples of some of the best data science courses in India include:

- upGrad Campus’s Certification Program in Data Science & Analytics and Job-Ready Program in Data Science and Artificial Intelligence.

- Great Learning’s PG Program in Artificial Intelligence and Machine Learning.

- AnalytixLabs’ course in data science and machine learning.

5. Network with peers and experts

Who better to approach for the latest artificial intelligence developments than experts and peers?

- Network with your mentors and peers on social media, at events, and in webinars to gain practical insights and the latest updates on AI.

- Networking gives you the opportunity to exchange ideas, ask questions, and get feedback, all of which help keep you up to date on AI developments.

6. Experiment and innovate

Because artificial intelligence changes so quickly, it pays to keep experimenting and innovating. Here’s how you can do so:

- Engage in hands-on personal projects to test your knowledge of AI algorithms

- Participate in hackathons and open-source projects to acquire practical experience by tackling real-world problems

- Stay curious and keep exploring new tools, frameworks, and approaches to adapt to emerging AI trends

7. Stay curious and open-minded

Cultivating curiosity and open-mindedness is crucial in an industry that keeps evolving. Here are a few simple ways to do it:

- Keep an eye on all the resources mentioned above to stay up to date with the AI field.

- Commit to lifelong learning by regularly reading books, news articles, and academic papers.

- Don’t hesitate to ask questions and seek new information or clarification when you encounter challenges.

Website references for staying up-to-date:

Here’s a curated list of valuable resources for data scientists who want to stay informed about everything unfolding in the AI world.

Twitter (X):

Learn directly from top data scientists and influencers who have played a pivotal role in educating the public about developments in data science.

Here is a list of influential Twitter accounts that you can follow for AI updates:

| No. | Influential Twitter (X) Account |
|---|---|
| 1 | Allie K. Miller |
| 2 | Soumith Chintala |
| 3 | Fei-Fei Li |
| 4 | Lilian Weng |
| 5 | Berkeley AI Research |

Medium:

Medium is a great platform where influential figures write about the latest developments in various industries. Here’s a list of Medium publications and writers you can refer to for data science insights:

| No. | Name of the Source |
|---|---|
| 1 | Towards Data Science |
| 2 | Cassie Kozyrkov |
| 3 | Dario Radečić |

YouTube:

Another valuable resource is YouTube. It offers in-depth content on almost anything trending in the market, and it is especially useful for visual learners.

Empower Your Data Science Journey with Advanced AI Tools

Building a career in data science and artificial intelligence demands a proactive approach, a deep commitment to learning, and familiarity with the right AI tools. Learning in-demand skills like machine learning, Python, and advanced AI is essential to thrive as a data scientist.

Take the next step in your journey as a data scientist by exploring the data science and artificial intelligence courses offered by upGrad Campus. upGrad Campus’ AI/ML courses with placement are taught by industry experts and offer a comprehensive curriculum and advanced tools designed to prepare you for a successful career in data science and AI.

Start your journey with upGrad Campus today and get ready to transform your future.


FAQs (Frequently Asked Questions):

How do I stay up to date with AI as a data scientist?

To stay up to date on AI as a data scientist, regularly read and subscribe to relevant industry blogs on Medium and newsletters such as The Rundown AI. You can also keep tabs on conferences such as KDD, CVPR, and ACL, and follow LinkedIn influencers for first-hand updates.

Enrolling in online courses is another effective way for both beginners and professionals to stay updated on AI. Popular options include upGrad’s data science course, as well as MOOCs and courses on platforms such as Coursera and DataCamp.

What is AI and why should I keep up with it?

AI (Artificial Intelligence) enables machines to learn from experience, adjust to new inputs, and perform human-like tasks. Most AI applications you come across today, such as robot waiters and self-driving cars, rely heavily on techniques like deep learning and natural language processing. Because these techniques evolve quickly, keeping up with them helps you stay relevant as a data scientist.

Where can I find beginner-friendly updates on AI?

IEEE Spectrum, an award-winning website, is a great source of AI industry news that helps you understand artificial intelligence developments in automobiles, robotics, finance, healthcare, and various other fields.

How do you keep track of AI development?

One of the simplest ways to keep track of AI developments is to subscribe to newsletters and blogs that summarise the most important and trending papers, news, and events.

What is the future of data science and artificial intelligence?

The data science market is projected to reach USD 178 billion by 2025, while the AI market is projected to grow 13.7% to USD 202.57 billion by 2026. Today, both data science and AI benefit companies across industries, and demand for both is expected to keep growing.
